Building a file conversion service with Django, Celery, and Docker

I recently needed to convert PDF and PPTX files into JPG images for our product. In the concrete scenario, when the service receives a file from a caller, it extracts the text and converts the file into images, then returns both to the caller. I ran into many problems while building this service, so I wanted to take some notes and explain them clearly.

File conversion

Converting PPT or PPTX files into images is not easy, because it requires a rendering engine. Fortunately, LibreOffice or OpenOffice can do the job. I chose unoconv, which makes it easy to drive the office packages in headless mode from the command line. However, when converting PPT or PPTX to images, unoconv only converted the first page and skipped the rest. So the solution is split into two steps: ppt(x) -> pdf, then pdf -> jpg. Although unoconv can also convert PDF into JPG, I used ImageMagick for the second step.
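The two steps above can be sketched in Python as a pair of command lines. This is only a rough illustration, not the code our service runs: the function names, the output naming pattern, and the density value are my assumptions, and it expects the `unoconv` and `convert` executables to be on the PATH.

```python
import subprocess
from pathlib import Path
from typing import List


def build_pdf_command(source: Path, pdf_path: Path) -> List[str]:
    """Step 1: unoconv drives headless LibreOffice to produce a PDF."""
    return ["unoconv", "-f", "pdf", "-o", str(pdf_path), str(source)]


def build_jpg_command(pdf_path: Path, out_dir: Path, density: int = 150) -> List[str]:
    """Step 2: ImageMagick renders one JPG per PDF page."""
    # ImageMagick expands %03d to the page index (000, 001, ...).
    # On ImageMagick 7 the executable may be `magick` instead of `convert`.
    pattern = out_dir / f"{pdf_path.stem}-%03d.jpg"
    return ["convert", "-density", str(density), str(pdf_path), str(pattern)]


def convert_to_images(source: Path, out_dir: Path) -> List[Path]:
    """Run ppt(x) -> pdf -> jpg, skipping step 1 when the input is already a PDF."""
    out_dir.mkdir(parents=True, exist_ok=True)
    if source.suffix.lower() == ".pdf":
        pdf_path = source
    else:
        pdf_path = out_dir / f"{source.stem}.pdf"
        subprocess.run(build_pdf_command(source, pdf_path), check=True)
    subprocess.run(build_jpg_command(pdf_path, out_dir), check=True)
    return sorted(out_dir.glob(f"{pdf_path.stem}-*.jpg"))
```

Keeping the command construction separate from `subprocess.run` makes it easy to check the arguments without having either tool installed.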

Why did I use ImageMagick rather than an office application? Because running an office application in the background to convert files uses more than 500MB of memory. In our production environment, many processes run simultaneously on the same machines, so I wanted to reduce memory usage.

Before converting files, there was another problem to fix: fonts, which were the main factor in the quality of the converted results. Microsoft’s fonts are widely used in presentations, so I prepared some popular ones such as Times New Roman by following the installation part of this article. Chinese fonts were included as well. Two Dockerfiles describe the details:

- LibreOffice, unoconv, and the prepared fonts (Dockerfile)

- ImageMagick on Alpine (Dockerfile)

I installed ImageMagick on Alpine instead of Ubuntu to minimize the Docker image size. I skip text extraction here; it is much simpler than producing images, and I just used some Python packages for it.

Asynchronous tasks

I used Celery to handle asynchronous tasks. After our service receives a file, the work is scheduled as several asynchronous tasks. In Celery, I think people use the s() shortcut more often than the full signature() method, and most of our code that builds signatures uses the shortcut. What confused me is that in some situations it does not work, such as passing options inside a chain. We used options to set task ids that we had generated beforehand. Within the chain, the full signature() call is required, so the example looks like this:

```python
chain = taskA.signature((args,), task_id=task_ids['taskA'])
chain |= taskB.signature((args,), task_id=task_ids['taskB'])
chain |= taskC.signature((args,), task_id=task_ids['taskC'])
chain.apply_async()
```
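The `task_ids` mapping used in the example has to exist before the chain is built. A minimal sketch of how it might be prepared is shown here; `make_task_ids` is a hypothetical helper, and using UUID4 strings is my assumption, chosen because, as far as I know, that matches the shape of the ids Celery generates by default.

```python
import uuid
from typing import Dict, Iterable


def make_task_ids(task_names: Iterable[str]) -> Dict[str, str]:
    """Create one fresh UUID4 string per task name, before anything is scheduled."""
    return {name: str(uuid.uuid4()) for name in task_names}


# The names mirror the tasks in the chain; each value would be passed
# as task_id=... when the corresponding signature is created.
task_ids = make_task_ids(["taskA", "taskB", "taskC"])
```

Because the ids are known up front, they can be stored or returned to the caller before any task has started, and each step's state can be looked up later by its id.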

Chaining a set() call is another solution, described in the Celery Canvas documentation:

You can’t define options with s(), but a chaining set call takes care of that:

```python
>>> add.s(2, 2).set(countdown=1)
proj.tasks.add(2, 2)
```

Work together as a service

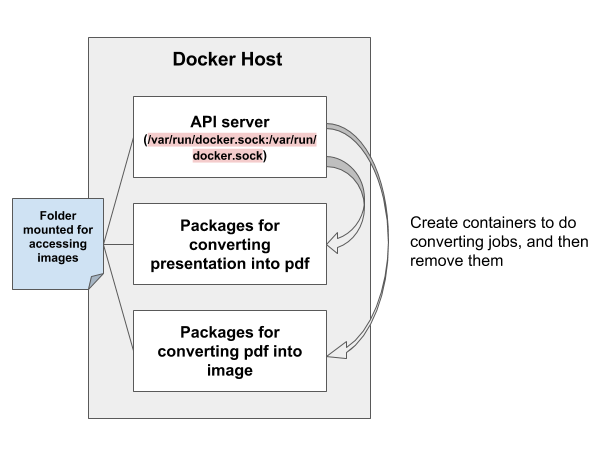

The system diagram shows how these components work together in the Docker environment. To access files, all containers mount the same folder, which ensures that each task uses the files produced by the previous one. The API server, built with Django and Celery, controls the whole process, including interacting with other services and scheduling tasks. Besides the shared folder, the API server also mounts /var/run/docker.sock:/var/run/docker.sock so that it can run docker commands from inside the container.
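As a rough illustration of what running a docker command from inside the API container could look like, here is a sketch that builds a `docker run` invocation with the shared folder mounted. The image name, the paths, and the choice of `docker run` itself are assumptions made for the example; the actual service may start its containers differently.

```python
import subprocess
from typing import List


def build_docker_run(image: str, shared_dir: str, command: List[str],
                     mount_point: str = "/data") -> List[str]:
    """Build a `docker run` command that mounts the shared folder into the container."""
    # With docker.sock mounted, the daemon on the host resolves shared_dir,
    # so it must be a path on the host, not a path inside the API container.
    return ["docker", "run", "--rm", "-v", f"{shared_dir}:{mount_point}", image, *command]


def run_in_container(image: str, shared_dir: str, command: List[str]) -> None:
    """Run the command and raise CalledProcessError if the container exits non-zero."""
    subprocess.run(build_docker_run(image, shared_dir, command), check=True)
```

A Celery task could then call something like `run_in_container("imagemagick-alpine", "/srv/shared", ["convert", "/data/deck.pdf", "/data/deck-%03d.jpg"])`, where both the image name and the host path are placeholders.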

I think this project is a good way to learn how to build an asynchronous task service. Some issues are still not covered here, such as communication between the API server and other backend servers using multipart/form-data, which is significantly more complicated but can send files and data in the same request.